What will be the total cost if we load micro batches of data using bulk load from S3 to Snowflake at high frequency? Can snowflake load huge data files in a single go?

In this blog, we shall understand how bulk load works in snowflake. What’s the syntax to bulk load data into Snowflake, and the cost to load frequent data using Bulk Load from S3?

There are 2 ways to load data to snowflake:

- Bulk Load using <COPY INTO>

- Continuous Load using SNOWPIPE

In this blog, we will discuss the Bulk Load using Copy Into command. Refer here if you want to learn more about continuous load using Snowpipe in snowflake using multiple notification systems (S3/SQS).

Bulk data load is a 4 step process. Before we deep dive into each step, you can refer to below SQL script for a quick get-through.

-- Create file format to let system know the format

create file format if not exists single_quote_csv_format

type = 'CSV'

field_delimiter = ','

skip_header = 1;

-- Check if any storage integration already exists

show storage integrations;

-- Create storage integration with role arn received from AWS console.

create or replace storage integration single_quote_blog_s3_integration

type = external_stage

storage_provider = 'S3'

storage_aws_role_arn = 'arn:aws:iam::<id>:role/single-quote-blog-snowflake-s3'

enabled = true

storage_allowed_locations = ('s3://single-quote-blog/sample_blog_data/');

-- Describe storage integration to get external_id to be applied on AWS IAM

role.

describe storage integration single_quote_blog_s3_integration;

-- Create external stage with created storage integration

create stage if not exists single_quote_blog_stage

file_format = single_quote_csv_format

url = 's3://single-quote-blog/sample_blog_data/json_sample_files/'

storage_integration = single_quote_blog_s3_integration;

-- Create a table to load data into the snowflake table

CREATE TABLE if not exists single_quote_employee_data(name VARCHAR(255), dob DATE, designation VARCHAR(255), event_time TIMESTAMP);

-- Run copy command to load data into snowflake table

copy into single_quote_employee_data

from @single_quote_blog_stage/random_object_storage_data.csv

on_error = 'skip_file';

-- Verify the loaded records from load history table

select * from SNOWFLAKE.ACCOUNT_USAGE.load_history order by last_load_time desc limit 10;

-- Run count on the table to validate the records loaded into the table

select count(*) from single_quote_employee_data;

-- Check few records for sanity

SELECT * FROM single_quote_employee_data;Now that we are aware of the quick syntax and steps, let us understand each step in brief and how to connect with the S3 bucket using AWS console.

Step 1: Create File Format Objects

File format helps Snowflake understand how should the data in the file be interpreted and processed.

create or replace file format single_quote_csv_format

type = 'CSV'

field_delimiter = ','

skip_header = 1;Step 2: Create Storage Integration & Access Permissions

In layman’s terms, Storage integration enables a handshake between Snowflake and your S3 bucket.

Snowflake objects store a generated Identity and Access Management(IAM) entity for your external cloud storage(S3), and to complete a handshake you are required to add an entity provided by Snowflake in authorized keys/entities.

Storage Integration is a combination of multiple steps. This includes multiple steps in this sequence:

Create AWS Policy >> Create AWS Role & attach policy >> Create SF Storage Integration >> Update Trust relationship using External ID & user arn from storage Integration

Now let us understand each of the above steps in detail.

Step 2.1: Create AWS policy:

Create AWS policy, that will give permission to Snowflake to be able to access files in the folder (and sub-folders).

This includes the following steps in order:

AWS Console >> Search IAM >> Policies >> Create Policy >> Click JSON >> Add JSON permission >> Add policy name >> Save

Give a unique name to a policy like “singlequote_sf_s3_policy” as this will be used in a later step while creating the AWS role.

Paste the following JSON to the “JSON” tab and click “Next” to save the policy

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:GetObjectVersion"

],

"Resource": "arn:aws:s3:::single_quote_blog/*"

},

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": "arn:aws:s3:::single_quote_blog",

"Condition": {

"StringLike": {

"s3:prefix": [

"sample_blog_data/*"

]

}

}

}

]

}Step 2.2: Create AWS role arn

Create AWS Role for Snowflake. In order to create a role use the given steps in order.

AWS Console >> Search IAM >> Click on Roles >> Create role >> AWS Service >> Use case (S3) >> select policy created in last step >> Add name >> Click Create role

Step 2.3: Create Storage Integration

Now copy the “role arn” from the Roles screen of the AWS console. It must be something like this:

arn:aws:iam::<id>:role/single-quote-blog-snowflake-s3Now go back to the Snowflake database and run the following command —

create or replace storage integration single_quote_blog_storage_int

type = external_stage

storage_provider = 'S3'

storage_aws_role_arn = 'arn:aws:iam::<id>:role/single-quote-blog-snowflake-s3'

enabled = true

storage_allowed_locations = ('s3://single-quote-blog/sample_blog_data/');There are other options available on the above SQL command which can allow or disallow a few locations. Please refer official snowflake documentation for more information.

If you are not sure whether storage integration is already available or not. or if you do not want to mess up the current data pipelines running on production, then it is recommended to check all the storage integration by listing them using the above command and, then describing them to check if the storage location is already available.

Step 2.3: Modify the Trust Relationship for the Role

Once you have created the storage integration, Snowflake creates an external Id, which is required to be added to the trust relationship. An external id and Snowflake user arn are required to grant access between your AWS resource (i.e. S3) and a third party (i.e, Snowflake).

Describe storage Integration to get IAM USER ARN & External ID >> AWS Cosole Roles > Trust Relationships >> Add json for trust relationship

Copy this external Id from this command

describe storage integration single_quote_blog_storage_int;

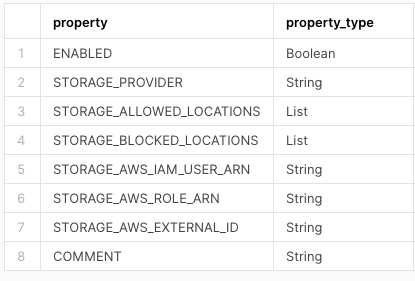

Get the “STORAGE_AWS_IAM_USER_ARN” and “STORAGE_AWS_EXTERNAL_ID” from the above result and modify JSON and paste it into the trust relationship.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"AWS": "arn:aws:iam::<id>:user/<id>"

},

"Action": "sts:AssumeRole",

"Condition": {

"ForAnyValue:StringEquals": {

"sts:ExternalId": [

"<external_id_1",

"<external_id_2>"

]

}

}

}

]

}Step 3: Create Stage Objects

A Snowflake stage specifies where the data files are stored(i.e “staged”) so that the data in the files can be loaded into a table. Stages can be external or internal.

create or replace stage single_quote_blog_stage

file_format = single_quote_csv_format

url = 's3://single-quote-blog/sample_blog_data/json_sample_files/'

storage_integration = single_quote_blog_storage_int;Using Copy command, You can load directly from the bucket, but Snowflake recommends creating an external stage that references the bucket and using that external stage instead.

Validate if the stage is correct by listing the files on the stage

list @single_quote_blog_stage;Step 4: Copy Data into Target Table

Before running a copy command, create a table, if not it does not already exist, followed by load data into the table, using the below command.

copy into single_quote_employee_data

from @single_quote_blog_stage/random_object_storage_data.csv

on_error = 'skip_file';Validate the successfully loaded data using the load_history table.

select * from SNOWFLAKE.ACCOUNT_USAGE.load_history

order by last_load_time desc limit 10;

Benchmarks & Cost for the Bulk Data Load

Let’s try to load some big files on the ‘M’ snowflake warehouse size.

Now, as discussed at the start of this blog, I wanted to load data onto the Snowflake at a higher frequency. These are the benchmarks and cost of the solution:

Here I am taking these considerations on high-frequency data.

- Each file has 1M records (5 columns) of size ~ 100MB.

- Each 1MB file takes around 8.2 Seconds to load into snowflake on XS warehouse size.

- Considering the Snowflake edition as Business Critical which cost $4/Credit. On Warehouse Size = XS, it will utilize 1 Credit/hour or $4/hour or $0.067/min.

- The minimum cost for every time warehouse starts (60 Secs).

This is clear from the costing above, more frequent data will add more cost to the snowflake cluster.

Points to be noted:

Few points to keep in mind while loading the CSV data into a database.

- Whenever we create a stage or storage integration, there is a hidden ID assigned to both and they are linked to each other. therefore, recreating a storage integration (using CREATE OR REPLACE STORAGE INTEGRATION) breaks the association between the storage integration and any stage that references it. Even if the names are the same, the Id generated will be different so the association will break. Therefore, it is necessary to establish all the connections again.

- Copy command can also be executed without storage integration, though this is not recommended, Syntax for direct copy command looks something like this or you can also create stage using credentials

-- Crendentials in copy command

Copy into single_quote_employee_data

from 's3://single-quote-blog/sample_blog_data/json_sample_files/random_object_storage_data.csv'

credentials=(AWS_KEY_ID = '<access_key>' AWS_SECRET_KEY = '<secret_key>')

file_format = (type = 'CSV');

-- Credentials in stage

CREATE STAGE single_quote_employee_data

URL = 's3://single-quote-blog/sample_blog_data/json_sample_files/'

CREDENTIALS = (AWS_KEY_ID = '*******' AWS_SECRET_KEY = '*********');- Snowflake expects each record in a CSV file to be separated by newlines and the fields (i.e. individual values) in each record to be separated by commas. If different characters are used as record and field delimiters, you must explicitly specify this as part of the file format when loading.

- While loading data into the database, snowflake looks for a ‘newline’ character for line separation and ‘comma’(,) as the default character for the field delimiter. You must specify if any other character is used.

- If fields and data are not aligned with the table schema, then Transforming Data During a Load can be used to match the schema with the table schema.

Conclusion:

The copy command is one of the most common methods to load bulk data into the database. It is one of the most prominently used commands across the industry. One of the challenges with the bulk load is that it is yet not ready to use. In times of zero code pipeline, another alternative is using the pipe to load data into the database. But that is the topic for the next blog.

[Writer’s Corner]

I am working at Innovaccer in Data Platform as Senior Engineering Manager and while writing this blog, I can proudly say that Innovaccer Data Platform has been rated Best in KLAS Data and analytics platform for 2 years in a row! Read more about the news here.