Can the S3 event notification service scale to handle billions of high-velocity events, and can SQS receive those events without dropping any data? This blog explores both questions.

I have been working in the healthcare industry for the past 8 years, and one of the most interesting problems in this industry is the skewness and variation in data. The day you think you have handled every issue with the data is the day you receive double what you faced earlier.

On a daily basis, we receive more than 200 million files, and this number can reach billions in a single historical data transfer. Another interesting aspect is file size, which ranges from a few bytes to 20 MB.

What’s the Goal?

The problem/target is very generic.

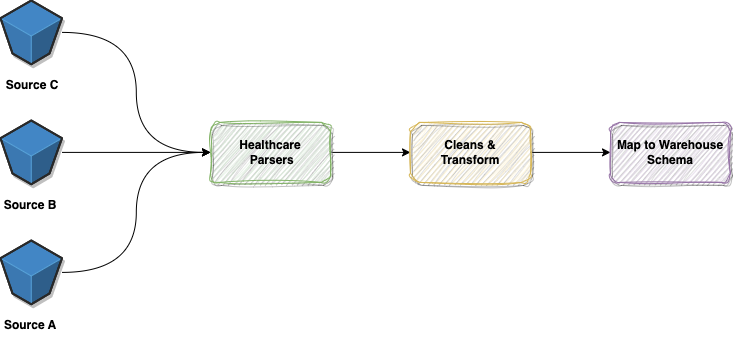

- Data is coming from multiple sources into the S3 bucket.

- Parse the data using standard parsers that scale automatically to handle huge numbers of files.

- Clean and transform the data into the standard format.

- Map the transformed source schema to warehouse schema.

Challenges with the Process

As per the above diagram, there are 4 steps. For this blog, we will limit our discussion to S3 source file arrival and parsers only, where we are interacting with billions of files in chronological order.

The system faces multiple challenges when the number of objects in S3 increases exponentially:

- Exploration of Data: Listing objects on the S3 bucket becomes very slow and tiresome for data engineers while exploring the data.

- Parsing and Cleansing: Parsing and cleansing bulk data becomes challenging because listing S3 objects is slow (at the time of writing, a single-threaded Python program takes approximately 20 minutes to list 1 million objects using the standard AWS SDK).

- Reprocessing and Error Handling: Tracking processed vs. unprocessed data becomes tricky with a large number of objects, and after any error, reprocessing the data takes a long time.

- Metadata Management: Metadata management becomes tricky if the metadata (size, modified time, ingested time, etc.) of objects is not already available (Read more about metadata management and its challenges here).
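To see why listing dominates these challenges: the S3 `ListObjectsV2` API returns at most 1,000 keys per response, so a single-threaded listing of 1 million objects needs at least 1,000 sequential round trips. Below is a minimal sketch of such a listing. It assumes a boto3 S3 client is passed in, and the function name is illustrative, not something from our codebase.

```python
from typing import Any, Iterator


def iter_object_metadata(s3_client: Any, bucket: str, prefix: str = "") -> Iterator[dict]:
    """Yield key, size, and last-modified time for every object under a prefix.

    `s3_client` is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    Each page holds at most 1,000 keys, and pages are fetched one after another,
    which is why a single thread gets slow on very large prefixes.
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent when a page has no matching keys.
        for obj in page.get("Contents", []):
            yield {
                "key": obj["Key"],
                "size": obj["Size"],
                "last_modified": obj["LastModified"],
            }
```

Counting objects under a prefix would then look like `sum(1 for _ in iter_object_metadata(client, "my-bucket", "base_path/"))`, where the bucket and prefix are placeholders.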

How to Solve the Above Problem?

The solution is also straightforward, but can we scale it enough to handle a billion objects?

We decided to track each file arriving in the S3 bucket and save its details in a database or other persistent storage, which our parsers can later query to overcome the problems mentioned above.

Although the AWS documentation makes it clear that S3 event notifications can get us where we want to go, we wanted to check whether they can handle a huge volume of high-velocity events, with files arriving at 4,000–5,000 objects per second.

Experiment

To validate this, we ran a quick experiment with these steps:

- Set up S3 and SQS with required permissions: Create an S3 bucket with the prefix required for the problem and an SQS queue with the permissions required to start receiving S3 events.

- Automate high-paced data load: Create a script that generates random 1 KB payloads and streams them to the S3 bucket as files, and another script that keeps checking the delay of the messages on SQS and compares their count to the number of files sent.

- Run and Monitor: Now run the scripts to load data and compare delay.
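The setup step can be sketched as two small builders: one for the SQS access policy that lets the bucket send messages, and one for the bucket notification configuration. This is a sketch under assumptions, not the exact configuration we used. The function names and all ARNs, IDs, and prefixes passed in are placeholders.

```python
import json


def build_queue_policy(queue_arn: str, bucket_name: str, account_id: str) -> str:
    """Return an SQS access policy (as a JSON string) that lets one bucket send messages."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "sqs:SendMessage",
                "Resource": queue_arn,
                # Restrict senders to the one bucket in the one account.
                "Condition": {
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{bucket_name}"},
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }
    return json.dumps(policy)


def build_notification_config(queue_arn: str, prefix: str, config_id: str) -> dict:
    """Return a notification configuration for object-created events under a prefix."""
    return {
        "QueueConfigurations": [
            {
                "Id": config_id,
                "QueueArn": queue_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
                },
            }
        ]
    }
```

With boto3, the policy string would be passed to `set_queue_attributes(QueueUrl=..., Attributes={"Policy": policy})` on an SQS client, and the configuration to `put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=config)` on an S3 client. The policy has to be in place first, because S3 validates that it can publish to the queue when the configuration is saved.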

It's Result Time:

Once we parallelized the load scripts and started loading files, the results surprised me.
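A parallel loader along these lines is enough to generate that kind of traffic. This is a hedged sketch rather than the actual script: it assumes a boto3 S3 client is passed in, and the function name, key pattern, and worker count are made up for illustration.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Any


def upload_random_files(s3_client: Any, bucket: str, prefix: str, count: int,
                        size_bytes: int = 1024, workers: int = 64) -> int:
    """Upload `count` objects of random bytes in parallel; return how many succeeded.

    `s3_client` is assumed to be a boto3 S3 client shared across threads.
    """

    def put_one(i: int) -> bool:
        try:
            s3_client.put_object(
                Bucket=bucket,
                Key=f"{prefix}file_{i:09d}.bin",  # hypothetical key pattern
                Body=os.urandom(size_bytes),      # random payload, 1 KB by default
            )
            return True
        except Exception:
            # A failed PUT is counted as a miss instead of stopping the run.
            return False

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(put_one, range(count)))
```

A single process with threads will eventually hit a ceiling, so reaching thousands of PUTs per second usually means running several such loaders side by side.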

With the automation script, I was able to reach up to 2,500 file creations per second on S3, and the maximum delay between a file landing and SQS receiving the event notification for the PUT event was 100 ms.
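One way to measure this delay is to compare the `eventTime` that S3 writes into each record with the `SentTimestamp` attribute that SQS attaches to the message (epoch milliseconds). The sketch below assumes the message was received with the `SentTimestamp` attribute requested. The function name is illustrative, and this is not necessarily how the monitoring script in the experiment computed it.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import List

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def notification_delays_ms(sqs_message: dict) -> List[int]:
    """Return, per S3 record, the milliseconds between eventTime and SentTimestamp.

    `sqs_message` is assumed to be one entry of the "Messages" list returned by
    an SQS receive call that asked for the SentTimestamp attribute.
    """
    sent_ms = int(sqs_message["Attributes"]["SentTimestamp"])
    body = json.loads(sqs_message["Body"])
    delays = []
    for record in body.get("Records", []):
        # eventTime looks like "2023-01-22T18:06:53.713Z" (UTC).
        event_time = datetime.strptime(
            record["eventTime"], "%Y-%m-%dT%H:%M:%S.%fZ"
        ).replace(tzinfo=timezone.utc)
        event_ms = (event_time - EPOCH) // timedelta(milliseconds=1)
        delays.append(sent_ms - event_ms)
    return delays
```

Both timestamps come from AWS-side clocks, so the result does not depend on the clock of the machine running the monitor.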

This is a sample message that you receive on SQS for the PUT event:

    {
      "Records": [
        {
          "eventVersion": "2.1",
          "eventSource": "aws:s3",
          "awsRegion": "us-east-1",
          "eventTime": "2023-01-22T18:06:53.713Z",
          "eventName": "ObjectCreated:Put",
          "userIdentity": {
            "principalId": "AWS:<PRINCIPAL ID>"
          },
          "requestParameters": {
            "sourceIPAddress": "<IP ADDRESS>"
          },
          "responseElements": {
            "x-amz-request-id": "12345678AF67",
            "x-amz-id-2": "wertyuiofghjkcvbn456789rtyui45678tyui56789rtyuiopertydfghjkcvbn"
          },
          "s3": {
            "s3SchemaVersion": "1.0",
            "configurationId": "s3_sqs_load_testing",
            "bucket": {
              "name": "s3_sqs_load_testing",
              "ownerIdentity": {
                "principalId": "<PRINCIPAL ID>"
              },
              "arn": "arn:aws:s3:::s3_sqs_load_testing"
            },
            "object": {
              "key": "s3_sqs_load_testing/base_path/1st_iterator/20230122/test.csv",
              "size": 14311,
              "eTag": "987fghjk789hj89",
              "versionId": "SdgdghjdbhfjhYUhjdhfj",
              "sequencer": "0063CD7B3D9FA2B9E6"
            }
          }
        }
      ]
    }
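A message like the one above can be flattened into one metadata row per object and saved to persistent storage. The sketch below uses SQLite only to stay self-contained. The table name `s3_files`, the column names, and both function names are hypothetical, and a production store would likely be a different database.

```python
import json
import sqlite3
from typing import List
from urllib.parse import unquote_plus


def extract_file_records(message_body: str) -> List[dict]:
    """Turn one S3 event message body into a list of flat metadata rows."""
    body = json.loads(message_body)
    rows = []
    # Messages without "Records" (such as s3:TestEvent) yield no rows.
    for record in body.get("Records", []):
        obj = record["s3"]["object"]
        rows.append({
            "bucket": record["s3"]["bucket"]["name"],
            "key": unquote_plus(obj["key"]),  # keys arrive URL-encoded
            "size": obj.get("size"),          # absent on delete events
            "etag": obj.get("eTag"),
            "sequencer": obj.get("sequencer"),
            "event_name": record["eventName"],
            "event_time": record["eventTime"],
        })
    return rows


def save_records(conn: sqlite3.Connection, rows: List[dict]) -> None:
    """Insert or overwrite one row per (bucket, object_key) pair."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS s3_files (
               bucket TEXT, object_key TEXT, size INTEGER, etag TEXT,
               sequencer TEXT, event_name TEXT, event_time TEXT,
               PRIMARY KEY (bucket, object_key))"""
    )
    conn.executemany(
        """INSERT OR REPLACE INTO s3_files
           VALUES (:bucket, :key, :size, :etag, :sequencer, :event_name, :event_time)""",
        rows,
    )
    conn.commit()
```

With the rows in a table, exploration becomes a query such as `SELECT object_key, size FROM s3_files WHERE object_key LIKE 'base_path/%'` instead of a bucket listing. Note that `INSERT OR REPLACE` simply keeps the last row written for a key, so it does not by itself protect against out-of-order notifications.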

Estimated Cost for the Problem:

As shown in the above diagram, the pricing for the solution is nominal compared to what it solves for your metadata management system.

The above cost is for 1 million objects. We wanted the cost for a billion objects. Scaling linearly by a factor of 1,000, the estimated cost for 1 billion objects is around 5.857 × 1,000 = $5,857.

This is the total cost of the system. However, the files are already landing on S3 and that cost cannot be removed, so we are adding approximately 15% to the cost of the current S3 system. The interesting part is that if you look at the whole journey, including parsers (which list the same objects multiple times) and metadata management (which lists files for data engineers to explore), the extra cost falls further, to approximately 10%.

This extra 10% cost has increased the total efficiency of the system by 2.5x: parsers now finish the job 2.5 times faster than they used to, which is a good win.

Limitations of the Current Approach

Though the above experiment was a success, there are still a few limitations that might be a deal-breaker for some use cases:

- The Amazon SQS queue must be in the same AWS Region as your Amazon S3 bucket (mention).

- Enabling notifications is a bucket-level operation and notifications need to be enabled for each bucket separately, though events can be published on a single queue (mention).

- After you create or change the bucket notification configuration, it usually takes about five minutes for the changes to take effect (mention).

- When notifications are first enabled or the notification configuration changes, an s3:TestEvent occurs. If your use case requires changing the configuration frequently, the consumer needs additional logic to skip these messages (mention).

- Event notifications aren’t guaranteed to arrive in the same order that the events occurred. However, notifications from events that create objects (PUTs) and delete objects contain a `sequencer`. It can be used to determine the order of events for a given object key. If you compare the `sequencer` strings from two event notifications on the same object key, the event notification with the greater `sequencer` hexadecimal value is the event that occurred later.

- Across events for different buckets or objects within a bucket, the `sequencer` value should not be considered useful for ordering comparisons (Check this Stack Overflow answer).

- If you’re using event notifications to maintain a separate database or index of your Amazon S3 objects, AWS recommends that you compare and store the `sequencer` values as you process each event notification.

- Each 64 KB chunk of a payload is billed as 1 request (for example, an API action with a 256 KB payload is billed as 4 requests).

- Every Amazon SQS action counts as a request. The `GET` per-request charge is the charge for handling the actual request for the file (checking whether it exists, checking permissions, fetching it from storage, and preparing to return it to the requester), each time it is downloaded. The data transfer charge is for the actual transfer of the file’s contents from S3 to the requester, over the Internet, each time it is downloaded. If you include a link to a file on your site but the user doesn’t download it and the browser doesn’t load it to automatically play, or pre-load it, or something like that, S3 would not know anything about that, so you wouldn’t be billed. That’s also true if you are using pre-signed URLs: those don’t result in any billing unless they’re actually used, because they’re generated on your server.
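Two of the limitations above, test events and out-of-order delivery, can be handled in the consumer. Here is a minimal sketch. It assumes a test message carries `"Event": "s3:TestEvent"` at the top level of its body, and it right-pads the shorter sequencer with zeros before comparing, which is an assumption taken from community guidance rather than something stated above. The function names and the in-memory index are illustrative.

```python
import json


def is_test_event(message_body: str) -> bool:
    """Return True for the s3:TestEvent message sent after a configuration change."""
    body = json.loads(message_body)
    return body.get("Event") == "s3:TestEvent"


def is_newer(candidate_seq: str, stored_seq: str) -> bool:
    """Return True if candidate_seq is strictly later than stored_seq.

    Only meaningful for two events on the same object key. The shorter value
    is right-padded with zeros (an assumption) so both have the same width.
    """
    width = max(len(candidate_seq), len(stored_seq))
    candidate = int(candidate_seq.ljust(width, "0"), 16)
    stored = int(stored_seq.ljust(width, "0"), 16)
    return candidate > stored


def apply_event(index: dict, key: str, sequencer: str, event_name: str) -> bool:
    """Update an in-memory index only when the event is newer for that key."""
    current = index.get(key)
    if current is not None and not is_newer(sequencer, current["sequencer"]):
        return False  # stale or duplicate notification, ignore it
    index[key] = {
        "sequencer": sequencer,
        "deleted": event_name.startswith("ObjectRemoved:"),
    }
    return True
```

Storing the last applied sequencer next to each key is what makes the stale check possible, which matches the AWS recommendation to compare and store sequencer values while processing.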

Conclusion

Though there are a few limitations to consider for some use cases before taking this to production, the experiment was successful. Here is what I achieved:

- The overall speed of file exploration by data engineers and stewards increased by up to 1.5x, as listing became easy for them and they can group files by regex pattern, which is not easy when exploring S3 directly (Read more about Data Engineers vs. Data Stewards here).

- The overall speed of parsing files increased by 2.5x, as listing can be avoided during parsing and parsers can be scaled by a pre-determined scaling factor (based on the number of files).

- Better data democratization for the organization by enabling rich search over data.

- … and many more.

[Writers Corner]

Data platforms and analytics are fundamental not only for IT companies but also for non-IT companies, and object storage is one of the most common components of a data platform. Keeping object storage healthy (un-messy) should be treated as a top priority.